I am a Senior Research Scientist in AR/VR at Meta Reality Labs. I obtained my PhD from the Mobile Systems Research Lab, University of Cambridge. My work focuses on data-efficient and scalable machine learning algorithms, particularly for mobile systems. I specialize in building robust AI models that handle complex, real-world scenarios using data-efficient approaches, including semi-supervised and self-supervised learning.

My key research areas include:

- Scalable and Data-efficient Machine Learning for Human Activity Recognition: I develop novel training algorithms that reduce data dependence while ensuring robust recognition in mobile applications. My work leverages semi-supervised and self-supervised learning techniques for human activity recognition, utilizing methods such as multimodal learning [TSALM @ NeurIPS 2024], contrastive learning [HCRL @ AAAI 2024, ML4MH @ NeurIPS 2020], self-training [IMWUT 2021], and multi-device collaboration [IMWUT 2022].

- Overcoming Catastrophic Forgetting in Continual Learning: I investigate strategies to address the challenges of catastrophic forgetting in continual learning, where models must learn from evolving data streams without losing previously acquired knowledge. My work focuses on approaches that balance stability and plasticity, ensuring models can generalize effectively across new tasks while retaining performance on past tasks [WACV 2024, ICASSP 2022].

- Federated Learning for Scalable Learning Algorithms: I explore decentralized machine learning paradigms that prioritize data privacy and enable collaborative learning across distributed devices [ICML 2022].

- AI for Health-related Applications: I apply machine learning techniques to health-related challenges, leveraging AI to enhance the accuracy and scalability of health monitoring applications [ML4H 2021, Nat. Mach. Intell. 2020].

I am passionate about leveraging AI to advance human-centric applications, from healthcare to mobile systems. By developing robust and scalable solutions, I aim to contribute to the future of ubiquitous computing, creating technologies that seamlessly integrate into and enhance our daily lives.

News

June 2025 - I have started a new role as a Senior Research Scientist in Wearables at Meta Reality Labs!

May 2025 - Call for participation! I will be running GenAI4HS (the Generative AI and Foundation Models for Human Sensing Workshop) at UbiComp 2025 in Espoo, Finland (on 12, 13 October 2025). Preliminary and early-stage work is welcome!

May 2025 - Paper acceptance news! Our survey paper, Past, Present, and Future of Sensor Based Human Activity Recognition using Wearables: A Surveying Tutorial on a Still Challenging Task, has been accepted to IMWUT, and another paper, BioQ: Towards Context-Aware Multi-Device Collaboration with Bio-cues, has been accepted for presentation at SenSys.

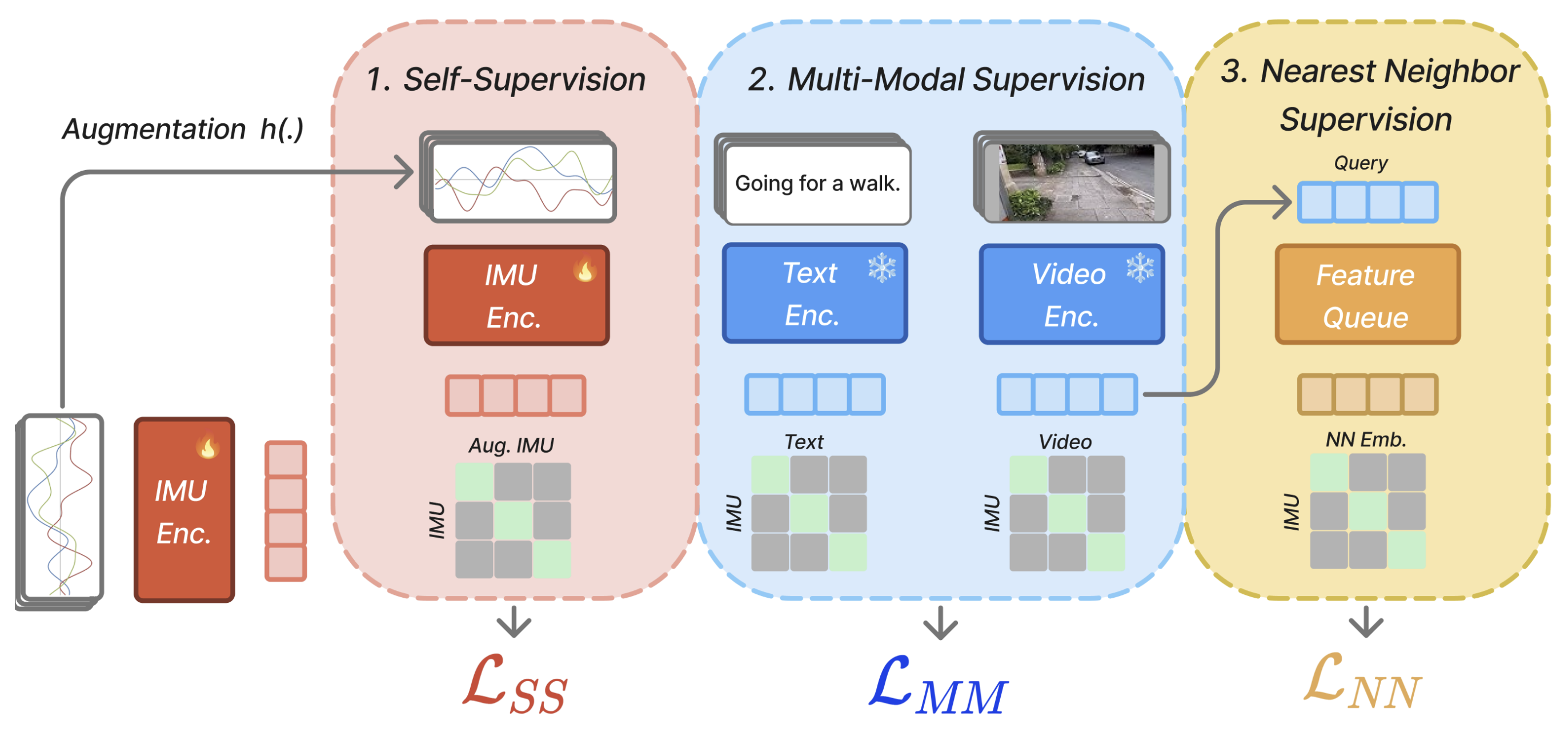

December 2024 - I will be attending NeurIPS in Vancouver this December with our summer intern, Arnav. We will be presenting our method, PRetraining IMU encoderS (PRIMUS), which explores multimodal learning from text and video data for IMU time-series. The presentation will take place on Sunday, 15 December, in Meeting Room 220-222 during the poster sessions: 9:34 AM - 10:35 AM and 1:00 PM - 2:00 PM of the TSALM workshop. Feel free to drop by to discuss recent developments in multimodal foundation models for personal and wearable applications.

September 2024 - I will be running the second edition of the UbiComp SOAR Tutorial on solving the activity recognition problem from 1:00 PM to 5:00 PM on October 6 in Melbourne, Australia. Come and join us for an exciting discussion!

Works/Publications

2025

Past, Present, and Future of Sensor Based Human Activity Recognition using Wearables: A Surveying Tutorial on a Still Challenging Task

Harish Haresamudram, Chi Ian Tang, Sungho Suh, Paul Lukowicz, Thomas Ploetz

In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). Volume 9 Issue 2, Article 34 (March 2025).

BioQ: Towards Context-Aware Multi-Device Collaboration with Bio-cues

Adiba Orzikulova, Diana Vasile, Chi Ian Tang, Fahim Kawsar, Sung-Ju Lee, Chulhong Min

In SenSys 2025 (ACM Conference on Embedded Networked Sensor Systems)

PRIMUS: Pretraining IMU Encoders with Multimodal Self-Supervision

Arnav Das, Chi Ian Tang, Fahim Kawsar, Mohammad Malekzadeh

In ICASSP 2025 Lecture (Oral Presentation) 🏆

Also in NeurIPS 2024 Workshop: Time Series in the Age of Large Models (TSALM)

2024

Solving the Sensor-Based Activity Recognition Problem (SOAR): Self-Supervised, Multi-Modal Recognition of Activities from Wearable Sensors

Harish Haresamudram, Chi Ian Tang, Sungho Suh, Paul Lukowicz, Thomas Ploetz

In UbiComp 2024 Companion

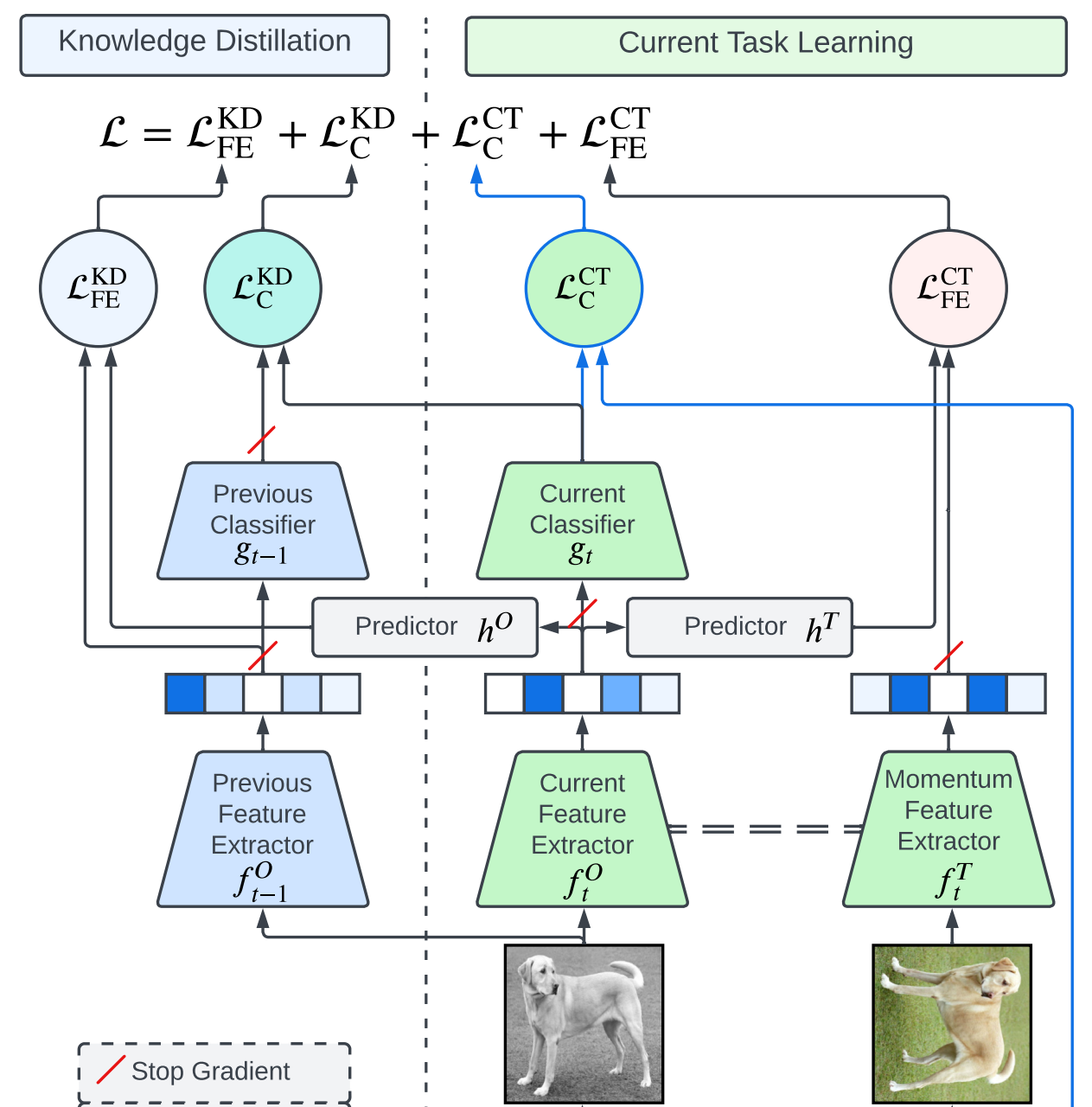

Balancing Continual Learning and Fine-tuning for Human Activity Recognition

Chi Ian Tang, Lorena Qendro, Dimitris Spathis, Fahim Kawsar, Akhil Mathur, Cecilia Mascolo

In AAAI 2024 Workshop: Human-Centric Representation Learning (HCRL)

Kaizen: Practical Self-Supervised Continual Learning With Continual Fine-Tuning

Chi Ian Tang, Lorena Qendro, Dimitris Spathis, Fahim Kawsar, Cecilia Mascolo, Akhil Mathur

In WACV 2024 (IEEE/CVF Winter Conference on Applications of Computer Vision)

2023

Self-supervised Learning for Data-efficient Human Activity Recognition

Chi Ian Tang

PhD Thesis

Solving the Sensor-based Activity Recognition Problem (SOAR): Self-supervised, Multi-modal Recognition of Activities from Wearable Sensors

Harish Haresamudram, Chi Ian Tang, Sungho Suh, Paul Lukowicz, Thomas Ploetz

In UbiComp/ISWC 2023 Adjunct

2022

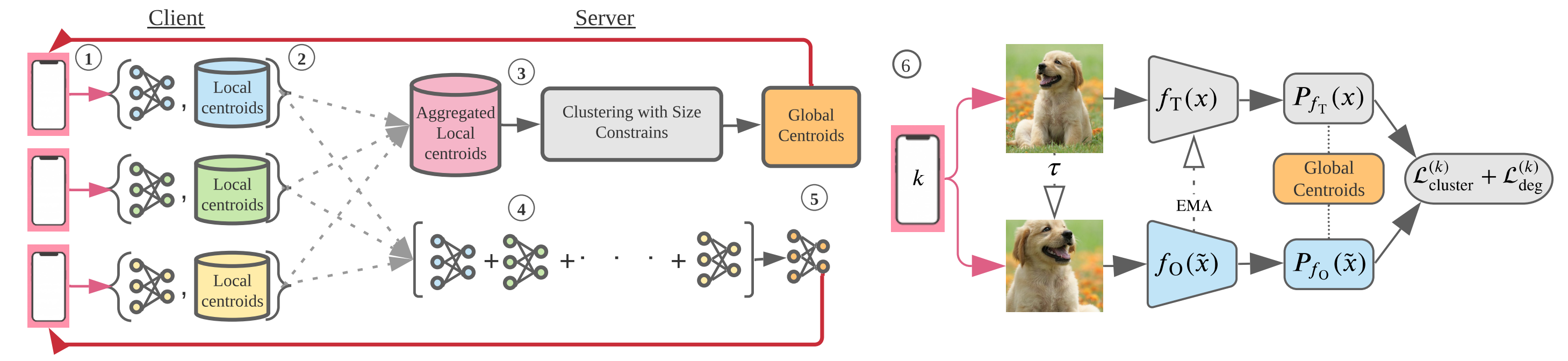

Orchestra: Unsupervised Federated Learning via Globally Consistent Clustering

Ekdeep Singh Lubana, Chi Ian Tang, Fahim Kawsar, Robert P. Dick, Akhil Mathur

In ICML 2022 (International Conference on Machine Learning)

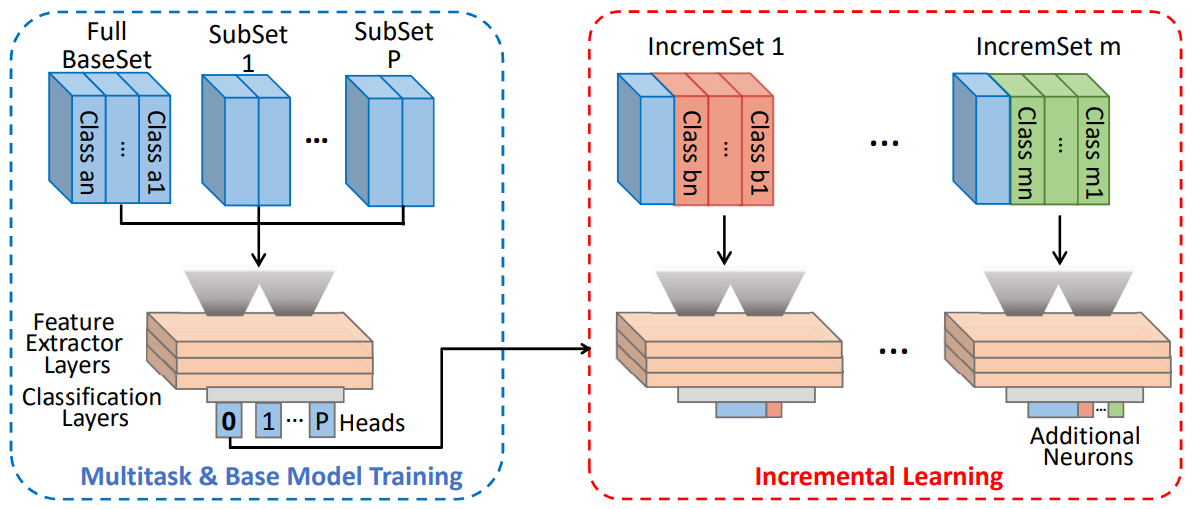

Improving Feature Generalizability with Multitask Learning in Class Incremental Learning

Dong Ma*, Chi Ian Tang*, Cecilia Mascolo

*Ordered alphabetically, equal contribution

In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2022

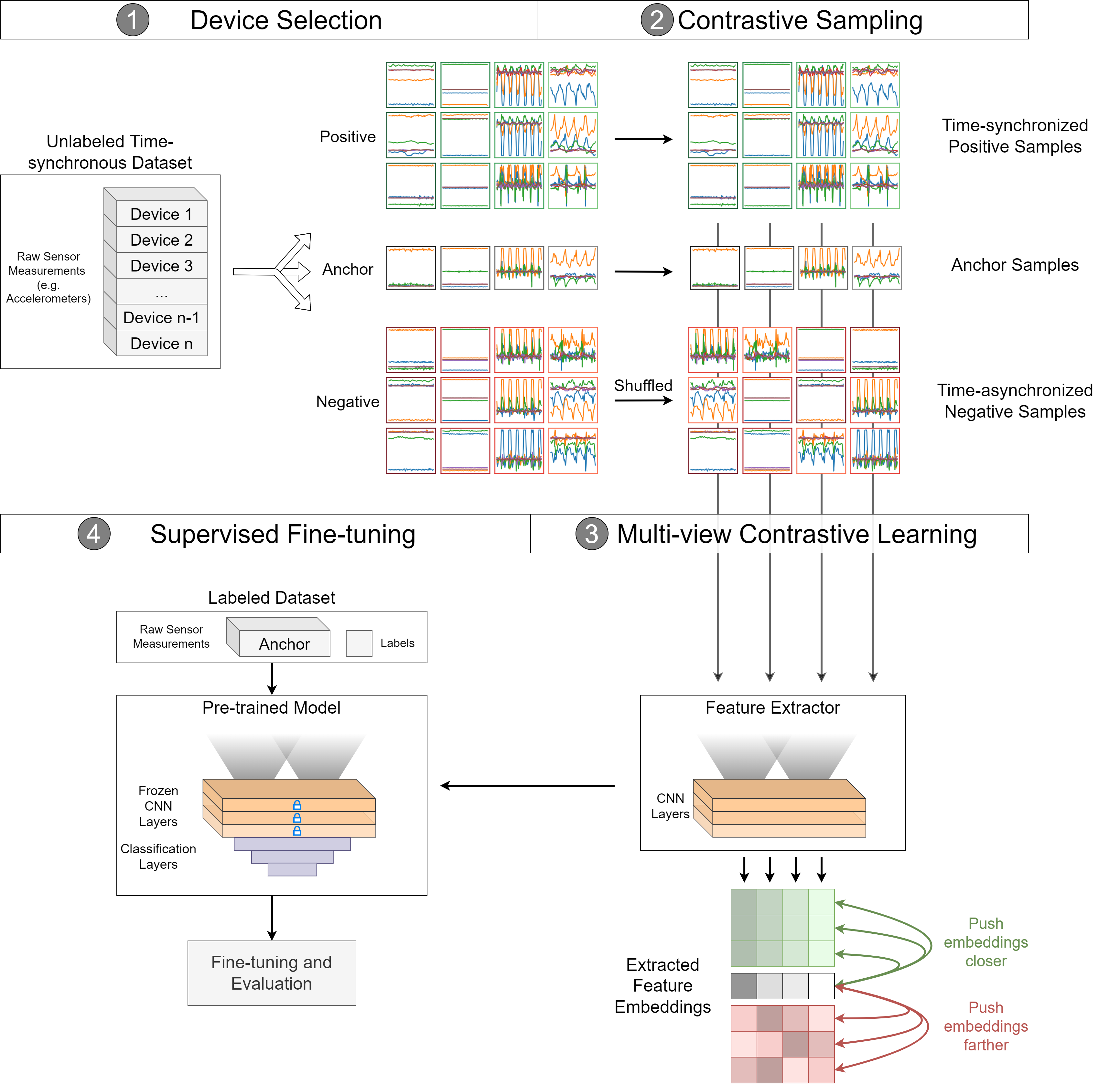

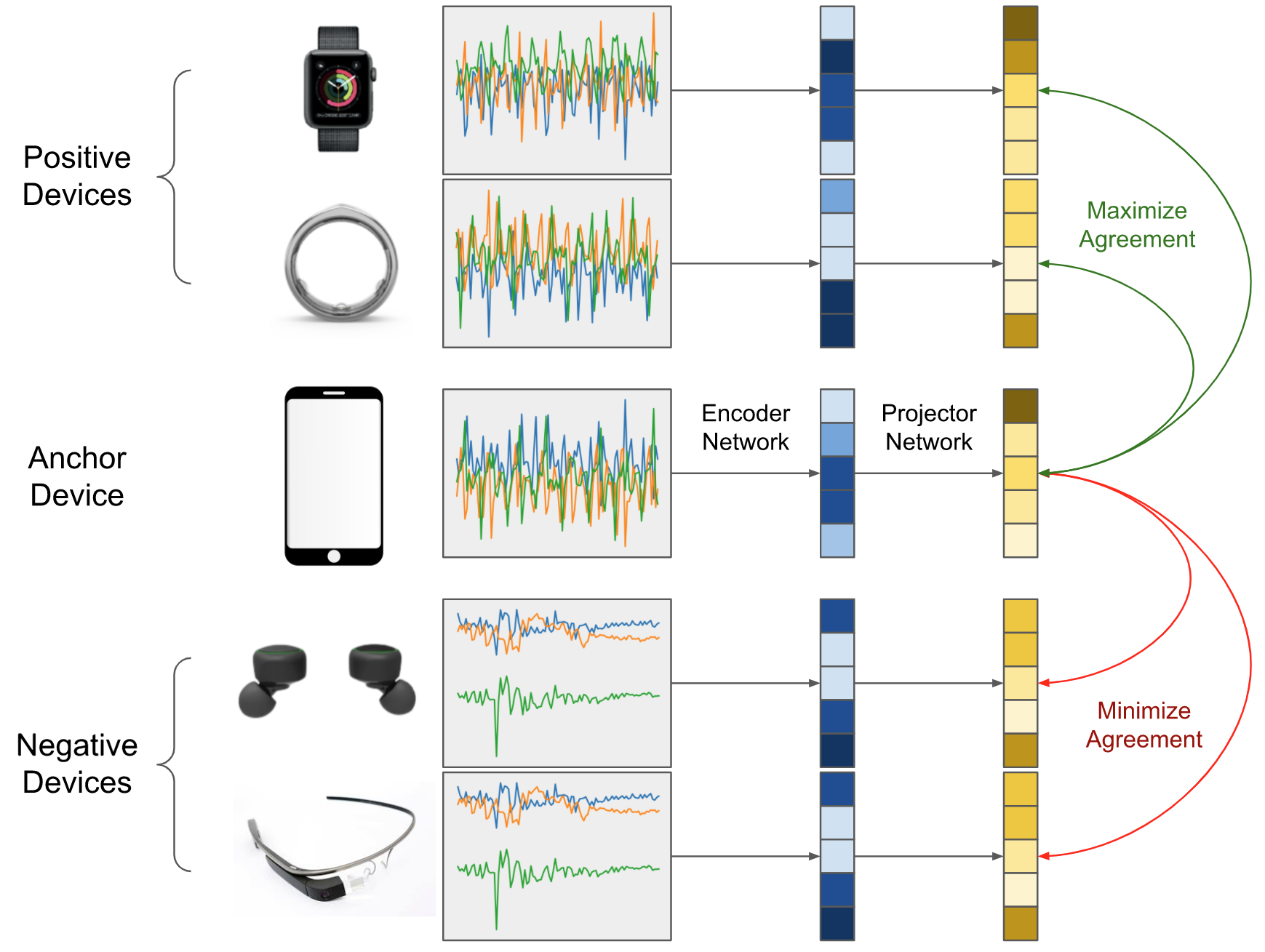

ColloSSL: Collaborative Self-Supervised Learning for Human Activity Recognition

Yash Jain*, Chi Ian Tang*, Chulhong Min, Fahim Kawsar, Akhil Mathur

*Ordered alphabetically, equal contribution

In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). Volume 6 Issue 1, Article 17 (March 2022).

2021

Evaluating Contrastive Learning on Wearable Timeseries for Downstream Clinical Outcomes

Kevalee Shah, Dimitris Spathis, Chi Ian Tang, Cecilia Mascolo

In Machine Learning for Health (ML4H) 2021

Group Supervised Learning: Extending Self-Supervised Learning to Multi-Device Settings

Yash Jain*, Chi Ian Tang*, Chulhong Min, Fahim Kawsar, Akhil Mathur

*Equal Contribution

In ICML 2021 Workshop: Self-Supervised Learning for Reasoning and Perception

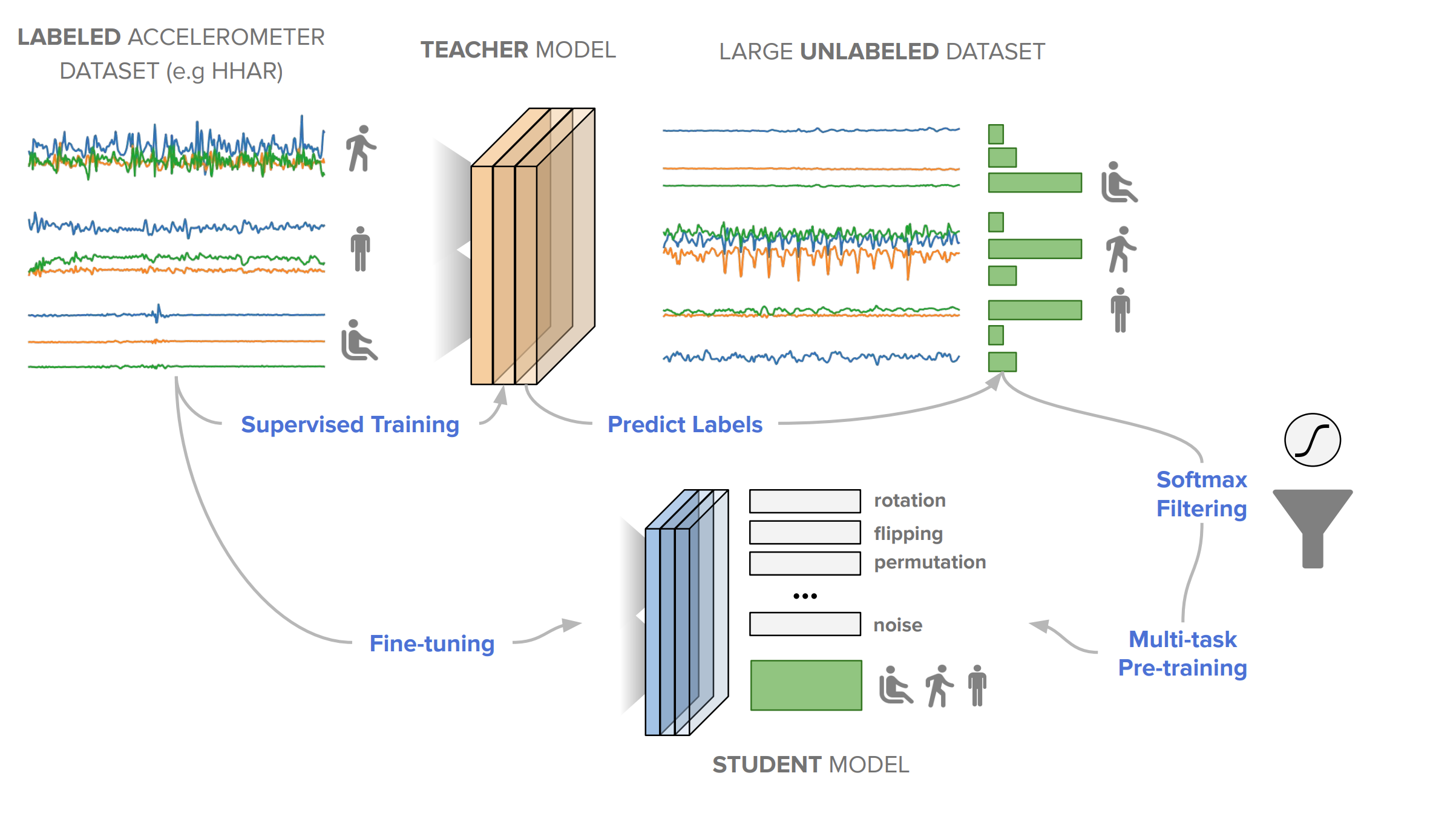

SelfHAR: Improving Human Activity Recognition through Self-training with Unlabeled Data

Chi Ian Tang, Ignacio Perez-Pozuelo, Dimitris Spathis, Soren Brage, Nick Wareham, Cecilia Mascolo

In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). Volume 5 Issue 1, Article 36 (March 2021).

2020

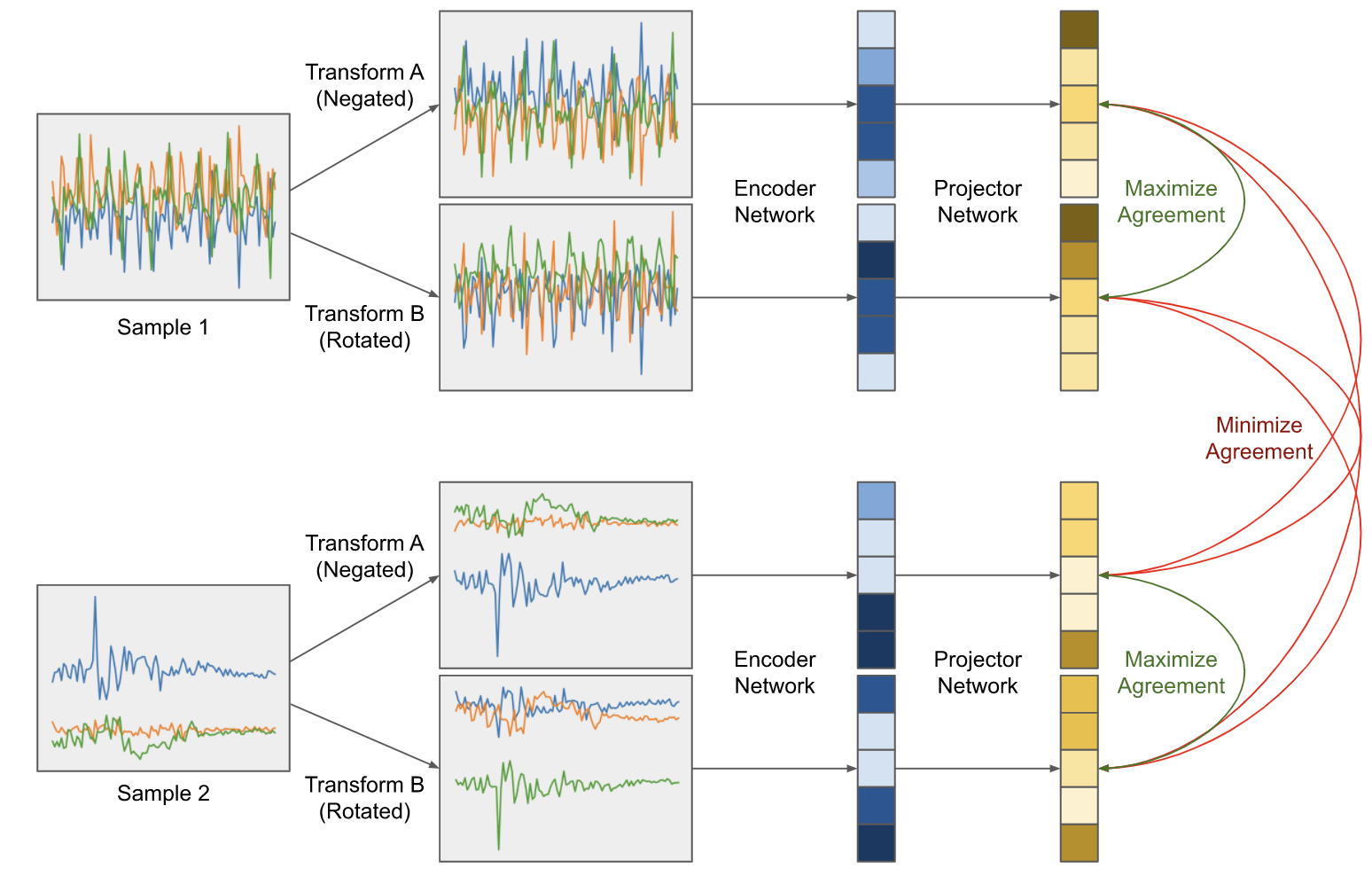

Exploring Contrastive Learning in Human Activity Recognition for Healthcare

Chi Ian Tang, Dimitris Spathis, Ignacio Perez Pozuelo, Cecilia Mascolo

In ML for Mobile Health Workshop at NeurIPS. 2020.

Exploring the limit of using a deep neural network on pileup data for germline variant calling

Ruibang Luo, Chak-Lim Wong, Yat-Sing Wong, Chi-Ian Tang, Chi-Man Liu, Henry CM Leung, Tak-Wah Lam

In Nature Machine Intelligence. 2020.

Academic Service

I have served in the following roles:

- Associate Editor of ACM IMWUT (2025-)

- Program Committee of MobiSys (2025)

- Technical Program Committee of ABC (2025-) (Elite Reviewer) 🏆

- Co-chair of the Generative AI and Foundation Models for Human Sensing Workshop (GenAI4HS) at UbiComp 2025

- Organizer of the UbiComp Tutorial on Solving the Sensor-Based Activity Recognition Problem (SOAR) (2023 Cancun, Mexico; 2024 Melbourne, Australia)

- Organizer of HCRL workshop at AAAI 2024, Vancouver, Canada

Invited Talks

- The Future of Wearable Health: Mobile AI Research at Nokia Bell Labs

  At Korea University, South Korea, 2025 May 28

- Data-Efficient AI and Multimodal Learning: Unlocking the Potential of Mobile Sensing for Health Insights

  At University of Macau, Macao SAR, 2025 January 10

Mentoring and Teaching

Mentoring

I truly enjoy mentoring PhD students and always learn a lot from it. Here are some research projects I have had the pleasure of supervising:

- Arnav Das (University of Washington): Multimodal learning for mobile sensing

- Florian Schmid (Johannes Kepler University Linz): Sound event detection

Lectures/Tutorials

I gave a lecture/tutorial on Machine Learning and Features of Health Data in the Mobile Health course (Master's level) at the Department of Computer Science and Technology, University of Cambridge. Slides are available: Slides (2023)

Supervisions

I have supervised students and demonstrated for the following courses at the University of Cambridge:

- Programming in C and C++ (2020-2021)

- Machine Learning and Real-world Data (2019-2021)

- Computation Theory (2021-2024)

- Digital Electronics (2022-2023)

Teaching Materials

- How to Use the Y Combinator (for Computation Theory 2021-2022)

© 2020-2025 Chi Ian Tang. All rights reserved.